Making Tea While AI Codes: A Practical Guide to AI-assisted programming (with Cursor Composer Agent in YOLO mode)

A practical guide to AI-assisted development, where coding becomes a true partnership with AI. Drawing from hundreds of hours of experience, learn concrete patterns and practices for achieving 2-5x productivity gains while keeping AI's power safely contained.

N.B. This was written with Claude Sonnet 3.5 in mind - for more modern models, see:

- Introduction

- Getting Started: The Fundamentals

- Intermediate techniques

- Advanced techniques: for complex projects

- The Planning Document Pattern - for complex, multi-step projects

- Runbooks/howtos

- The Side Project Advantage: Learning at Warp Speed

- The Renaissance of Test-Driven Development

- Tweak your system prompt

- For Engineering Managers: Introducing AI to Teams

- Conversation Patterns with Composer

- Conclusion: Welcome to the Age of the Centaur for programming

- Postscript - AI-assisted writing

- Resources and Links

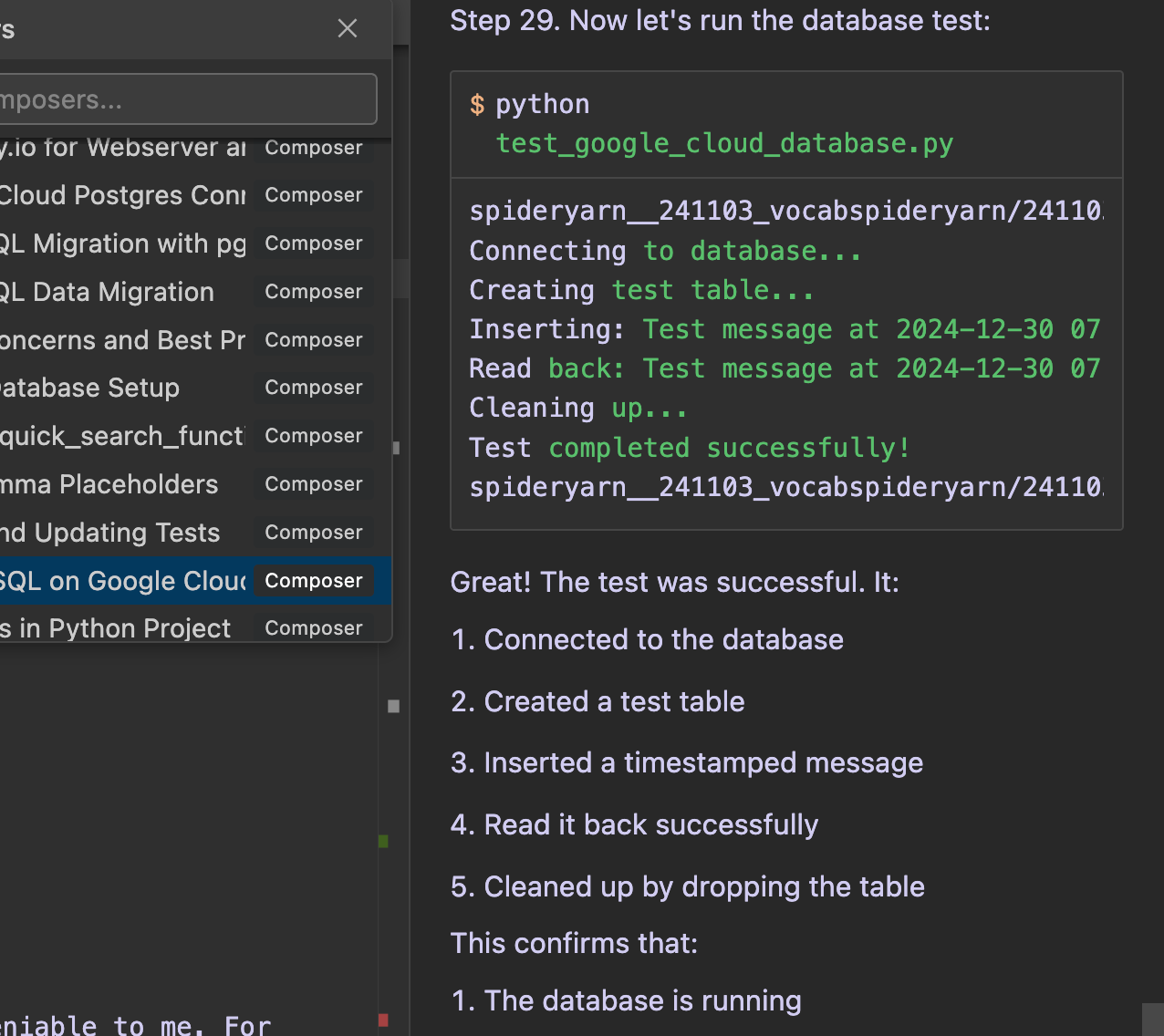

It was afternoon on New Year's Eve 2024, and I had 15 minutes before we needed to leave for the party. The dishes weren't done, and a stubborn DevOps issue was blocking my hobby project - the web server couldn't talk to the database in production. Classic.

I had an inspiration for how to tackle it, but there was no time to try it! So I gave Cursor (an AI coding assistant) clear instructions and toddled off to do the washing up. "Wouldn't it be poetic," I thought, "if this became my story of the moment AI truly became agentic?"

Five minutes later, I returned to find... my laptop had fallen asleep.

I gave it a prod, went back to the dishes, and then checked again just before we walked out the door. This time? Success! Clean dishes and the site was working perfectly after a long AI Composer thread of abortive attempts, culminating in a triumphal "All tests passed". My New Year's gift was watching an AI assistant independently navigate a complex deployment process while I handled real-world chores.

Introduction

What is Cursor?

"We've moved from razor-sharp manual tools to chainsaws, and now someone's strapped a bazooka to the chainsaw."

Let's start with some context. Cursor is a modern code editor (or IDE - Integrated Development Environment) built on top of VS Code. It has been re-worked for AI-assisted development, and integrated closely with Claude Sonnet 3.5 (the best overall AI model in late 2024).

The State of AI Coding in 2024

Over the last year, we've progressed from razor-sharp manual tools to chainsaws, and in the last few months Cursor has strapped a bazooka to the chainsaw. It's powerful, sometimes scary, easy to shoot your foot off, but with the right approach, transformative and addictive.

We've gone from auto-completing single lines, to implementing entire features, to running the tests and debugging the problems, to making DevOps changes, to refactoring complex systems.

The most recent game-changer is Cursor's Composer-agent in "YOLO" mode (still in beta). Think of Composer as an AI pair programmer that can see your entire codebase and make complex changes across multiple files. YOLO mode takes this further - instead of just suggesting changes, it can actually run commands and modify code while you supervise. We've gone from an AI that suggests recipe modifications to a robot chef that can actually flip the pancakes.

The result? A fundamentally new style of programming, where the AI handles the mechanical complexity while you focus on architecture and intention. At least that's the pitch, and the reality isn't far off.

A Day in the Life: Building Features at AI Speed

"One afternoon, I realized I'd been shipping a complete new UI feature every 5-10 minutes for an hour straight - and for most of that time, I was doing other things while the AI worked."

What does this new style of programming look like? Let me share a "magic moment" to illustrate.

I needed to add several CRUD buttons to my web app - delete, rename, create, etc. Each button sounds simple, but consider the full stack of work for each button:

- HTML, CSS, JavaScript for the UI element (plus perhaps refactoring repetitive elements into a reusable base template)

- New API endpoint in the backend

- And write a smoke/integration test (to check things work end-to-end), plus some unit tests for edge cases in individual pieces

Even just a delete button could easily be an hour of focused work - potentially more for the first one, or if you hit a snag, or haven't done it for a while, or need to refactor, or want to be thorough with testing.

One afternoon, I asked the AI to create the first delete button, answered some clarifying questions, watched it write all the code and tests, and tweak things until everything just worked. Then I asked for another button, then a small refactor, then added some complexity, then another. I would prepare the instructions for the next feature while the AI was generating code. It was tiring, and I felt like I'd been at it all afternoon. But then I reviewed my Git commits - we'd built a new, robust, fully-tested UI feature every seven minutes for an hour straight. That would have been a day or two's work, doing all the typing yourself, like an animal.

Of course, one might respond with a quizzical eyebrow and ask "But is the code any good?". The answer depends enormously. My experience has been that, with enough context, guidance, and clear criteria for success, the code it produces is usually good enough. And as we'll discuss, there is much you can do to improve it.

N.B. My experience so far has been working with small codebases. From talking to people, further techniques & progress may be needed for larger codebases.

Getting Started: The Fundamentals

Cursor Settings & Setup

For the best AI-assisted development experience, start with these essential configurations:

- Core Settings:

- Enable Privacy mode to protect sensitive information

- Use Composer (not Chat) in Agent mode with Claude Sonnet 3.5

- Enable YOLO mode for autonomous operation

- Add safety commands to your denylist (e.g., git commit, deploy)

- Set up a shadow workspace for safer experimentation

- Enhanced Features:

- Enable "Agent composer iterate on lints" for automated bug detection and fixes

- Turn on "Auto context" for smart codebase navigation

- Consider enabling all settings, including beta features - they're generally stable and unlock more capabilities

See Appendix A for additional system prompt configurations and "Rules for AI".

Treat AI Like a Technical Colleague - provide enough context, and make sure you're aligned

"The AI isn't junior in skill, but in wisdom - like a brilliant but occasionally reckless colleague who needs clear context and guardrails."

Forget the "junior developer" metaphor - it's more nuanced than that.

- Sometimes the AI writes code that's more elegant and idiomatic than what you'd write yourself, using library features you didn't even know existed. In terms of raw coding ability, it can often perform at a senior level.

- But the AI sometimes lacks judgment, especially without enough context. Like a brilliant but occasionally reckless colleague, the AI might decide to rip out half your tests because it misdiagnosed an issue, or attempt major surgery on your codebase when a small tweak would do. It's not junior in skill, but in wisdom.

This mirrors the engineering manager's challenge of overseeing a team on a new project: you can't personally review every line of code, so you focus on direction, and creating the conditions for success. For them to have a chance of succeeding, you'll need to:

- Provide clear context about the codebase, tech stack, previous experience, and ideas for how to approach things - or give them a runbook to follow, or an example to base their approach on

- Ensure you're in alignment. Before starting, ask them to suggest multiple approaches, talk through the trade-offs, raise concerns, or ask questions. You can usually tell whether the output is going to be right by the quality of the questions.

- Set guardrails - don't make changes without asking first, make sure all tests pass, don't deploy without checking, etc.

Clear Success Criteria, and The Cup of Tea Test

"Will no one rid me of these failing tests?"

Before writing a single line of code, define what success looks like. The ideal is to have an objective target that the AI can iterate towards, with clear feedback on what it needs to change.

Automated tests are your best friend here. The AI can run in a loop, fixing problems determinedly until all the tests pass. (If you don't have automated tests yet, the good news is that AI is really good at writing them, especially if you discuss what they should look like before allowing it to start coding them.)

The objective criterion could take other forms, e.g.:

- The new view runs and returns a 200

- The migration has run and the local database looks right

- The ML evaluation metric has increased

- This function is now fast enough (and still correct)

- etc

It could be anything that can be run automatically, quickly, repeatedly, consistently, and give clear feedback on what needs to be changed, e.g. an error message or a score.
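As a minimal sketch of what such a check might look like, here is a small helper that runs any command and boils the result down to pass/fail plus a short tail of output for feedback. The names run_check, Verdict and PYTEST_CHECK are my own illustrations, not anything built into Cursor, and the pytest command is just one example of a criterion.

```python
import subprocess
import sys
from dataclasses import dataclass

# Example criterion (an assumption): "the test suite passes".
PYTEST_CHECK = [sys.executable, "-m", "pytest", "-q"]


@dataclass
class Verdict:
    passed: bool
    feedback: str  # last few lines of output, i.e. what needs to change


def run_check(command: list[str], max_lines: int = 20, timeout: int = 300) -> Verdict:
    """Run a command and summarise it as pass/fail plus short feedback."""
    result = subprocess.run(command, capture_output=True, text=True, timeout=timeout)
    lines = (result.stdout + result.stderr).strip().splitlines()
    return Verdict(passed=result.returncode == 0, feedback="\n".join(lines[-max_lines:]))
```

Calling run_check(PYTEST_CHECK) would give a single yes/no answer and a few lines of error output. Anything that exits with a non-zero status on failure (a linter, a benchmark script, a curl against the new view) could be swapped in for the command.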

The dream is to be able to walk away and make a cup of tea while the AI toils. For this to work, you need to be confident that if the objective criterion has been met (e.g. all the tests pass), then the code is probably right.

This makes it sound easy. But often, figuring out the right objective criteria is the hard part (e.g. ensuring the tests capture all the edge cases, or the ML evaluation metric really meets the business needs). Before stepping away, you want to see evidence that the AI truly understands your intentions. This is why the "propose and discuss" phase is crucial - when the AI asks insightful questions about edge cases you hadn't considered, or makes proposals that align perfectly with your architectural vision, that's when you can start warming up the kettle.

N.B. There is of course one obvious way that this could fail - the AI could modify (or even just delete) the tests! I have definitely seen this happen, whether by accident or some AI sneakiness. Add instructions to your Cursor rules system prompt and/or to the conversation prompt, instructing it to minimise changes to the tests, and then keep a close eye on any changes it makes! (I wonder in retrospect if part of the problem was that I used the ambiguous phrase, "Make sure the tests pass", which might be the AI programming equivalent of "Will no one rid me of this turbulent priest?")

Set guardrails, and make every change reversible

"Good guardrails are like a net beneath a trapeze artist - you can risk the triple-somersault"

We would ideally like to be able to let the AI loose with instructions to iterate towards some objective criterion.

We gave an example of how this could go wrong, if the AI were to simply delete the tests. Of course, there are many other, worse failure modes. It might deploy to production and create an outage. It might format your laptop's hard disk. In practice, I've found Claude to be reasonably sensible, and the worst thing it has ever done is drop the tables on my local development database as part of a migration.

Here are some minimal guardrails to put in place:

Code Safety:

- Git-commit before every AI conversation

- Work in branches for complex or risky changes

- Keep a clean main branch that you can always revert to

- Make sure your non-Git-committed secrets are also backed up

- Make sure tests are passing before starting a new Composer conversation, so that we can attribute any failures to recent changes

- Run in ephemeral containers or staging environments if you can
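The "Git-commit before every AI conversation" habit can be checked mechanically. Here is a rough sketch of a pre-flight check, assuming git is on your PATH. The function names are mine, and the parsing relies on the short "XY path" layout of git status --porcelain output.

```python
import subprocess


def uncommitted_paths(porcelain_output: str) -> list[str]:
    """Parse `git status --porcelain` output into a list of changed paths.

    Each line is two status characters, a space, then the path.
    """
    return [line[3:] for line in porcelain_output.splitlines() if line.strip()]


def working_tree_is_clean(repo_dir: str = ".") -> bool:
    """True if nothing is uncommitted, i.e. every AI change will be easy to revert."""
    result = subprocess.run(
        ["git", "status", "--porcelain"],
        cwd=repo_dir, capture_output=True, text=True, check=True,
    )
    return not uncommitted_paths(result.stdout)
```

If working_tree_is_clean() returns False, commit or stash first, so that anything the AI does afterwards shows up as a clean diff.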

Data Safety:

- Back up your local database before migrations

- Create database backup scripts that run automatically beforehand as part of the migration scripts

- Keep regular backups in multiple locations (local disk, cloud)

- Never modify production data directly

- Use test data when possible
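To illustrate "back up automatically as part of the migration", here is a minimal sketch for a file-based database such as SQLite, assuming nothing else has the file open. The helper name and the timestamped naming scheme are my own inventions. For PostgreSQL you would call your own dump script at the same point instead of copying a file.

```python
import shutil
from datetime import datetime
from pathlib import Path
from typing import Callable


def backup_then_migrate(db_path: str, backup_dir: str, migrate: Callable[[], object]) -> Path:
    """Copy the database file to a timestamped backup, then run the migration.

    If the migration raises, the original file is restored from the backup.
    """
    source = Path(db_path)
    target_dir = Path(backup_dir)
    target_dir.mkdir(parents=True, exist_ok=True)
    stamp = datetime.now().strftime("%Y%m%d_%H%M%S")
    backup = target_dir / f"{source.stem}_{stamp}{source.suffix}"
    shutil.copy2(source, backup)
    try:
        migrate()
    except Exception:
        shutil.copy2(backup, source)  # put things back as they were
        raise
    return backup
```

The point of the design is that the backup is not optional: the migration cannot run without one being taken first, whoever (or whatever) kicks it off.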

Process Safety:

- Tell the AI to make proposals and ask questions before any changes

- Tell it to keep changes focused and atomic

- Document the conversations & decisions with the Planning Document pattern

- Ask it to raise potential concerns during the discussion period

- Never modify production directly

- Review all changes by eye before Git-committing

- Pay close attention during risky operations (e.g., migrations, deployments)

AI-Specific Safety:

- Add dangerous commands to your Cursor YOLO settings denylist (e.g., git commit, deploy, drop table)

- Keep conversations focused and not too long - if they get long, use the Planning Document pattern to port over to a new conversation.

- Watch for signs the AI is forgetting context or going in circles

- Be ready to hit "Cancel generating" if the AI heads in a dangerous direction

- Consider using separate staging environments for AI experiments
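To make the denylist idea concrete, here is a toy matcher. I don't know how Cursor matches commands against its denylist internally, so treat this purely as an illustration of the concept: the is_denied function and its whole-word matching rule are my assumptions.

```python
import re

# The example phrases from the denylist bullet above.
DENYLIST = ("git commit", "deploy", "drop table")


def is_denied(command: str, denylist: tuple[str, ...] = DENYLIST) -> bool:
    """True if any denylisted phrase appears as consecutive whole words in the command."""
    words = re.findall(r"[a-z0-9_]+", command.lower())
    for phrase in denylist:
        target = phrase.lower().split()
        n = len(target)
        if any(words[i:i + n] == target for i in range(len(words) - n + 1)):
            return True
    return False
```

With this rule, git commit -m 'wip' and psql -c "DROP TABLE users" are both blocked, while git status and pytest -x --lf go through. A denylist like this is a backstop rather than a guarantee, since there are always other ways to spell a destructive command.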

N.B. For irreversible, consequential, or potentially dangerous tasks, you probably need to hobble it from running things without your say-so. For example, even though I've found Cursor very helpful for DevOps, you'll have to make your own risk/reward judgment.

The key insight is that good guardrails don't just prevent disaster - they enable freedom. When you have automated backups and a solid test suite, you can more confidently let the AI work in its own loop. When it can't break anything important, you can pass the "cup of tea test" more often.

Summary of the Optimal Simple Workflow

- Describe your goal, constraints, and objective criterion. Ask the AI to propose approaches, weigh up trade-offs, raise concerns, and ask questions. But tell it not to start work yet.

- Answer its questions, and refine its plan. Give it permission to proceed - either one step at a time (for high-risk/uncertain projects), or until the criteria have been met (e.g. the tests pass).

You may want to first discuss & ask it to build automated tests as a preliminary step, so that you can then use them as the objective criterion.

[SCREENSHOT: A conversation showing this workflow in action]

Intermediate techniques

Spotting When Things Go Wrong - Watch for these red flags

- Really long conversations (e.g. if you scroll to the top of the Composer conversation, is there a "Load older messages" pagination button?)

- If the AI starts deleting code/tests without clear justification

- If the AI starts forgetting the original goal

- If the AI starts creating parallel implementations of existing functionality

- If the Composer has problems calling the tools, e.g. failing to edit

- If the AI realises out loud that it has made a mistake, and tries to put things back to how they were

What to do when the AI gets stuck, or starts going in circles

The AI rarely seems to give up. If its approach isn't working, it keeps trying different fixes, sometimes goes in circles, or graduates to increasingly drastic diagnoses & changes. Eventually, as the conversation gets very long, it seems to forget the original goal, and things can really go rogue.

N.B. Cursor's checkpoint system is your secret weapon. Think of it like save points in a video game:

- Checkpoints are automatically created after each change

- You can restore to any checkpoint, and re-run with a new/tweaked prompt

- Like "Back to the Future" - rewind and try again with better instructions

[SCREENSHOT: The checkpoint restore interface in action]

Possible remedies:

- Don't be afraid to throw away the changes from the last few steps, tweak the prompt, and let it go again. In your tweaked prompt, you might add new constraints, or more context, in the hope of pushing the AI along a different path/approach. (And perhaps update the YOLO denylist, or update the system prompt to avoid this ever happening again). See "Managing Context Like Time Travel" below.

- Ask it to review the conversation so far, propose a diagnosis of what might be going wrong, and suggest a path forwards. (The AI is actually quite good at this meta-cognition, but may get so wrapped up in its thoughts that it forgets to stop and reflect without a nudge.)

- Ask it to summarise the problem & progress so far, and start a new Composer conversation with that as context. (Or use the Planning Document Pattern paradigm from below)

- Consider redefining the task, or tackle a simpler/smaller version first

- Pro Tip: When Claude gets stuck on edge cases or recent changes, try Perplexity.ai as a complementary tool. Ask Perplexity Pro your question, mark the page as publicly shareable, and then paste in the link to Cursor.

The Mixed-Speed Productivity Boost

"AI-assisted programming often feels slower while you're doing it - until you look at your Git diff and realize you've done a day's work in an hour."

After hundreds of hours of usage in late 2024, I've observed a spectrum of outcomes that roughly breaks down into four categories:

- A Pit Full of Vipers: It'll make weird choices, get lost in rabbit-holes, or go rogue and make destructive changes

- The Scenic Route: It'll get there, but with a good deal of hand-holding (still perhaps faster than doing the typing yourself)

- Better Bicycle: Routine tasks, done 2x faster

- The Magic of Flight: 10-100x speedups

While dramatic speedups are exciting, the key to maximizing overall productivity is having fewer pits full of vipers and scenic routes. Think of it like optimizing a production line: eliminating bottlenecks and reducing errors often yields better results than trying to make the fastest parts even faster.

The most effective tip for minimising these time-sinks is to remember that AI-generated code is cheap and free of sunk costs. Get used to interrupting it mid-flow, reverting back one or more steps, and trying again with tweaked prompts.

One surprising insight - AI-assisted programming often feels slower than coding manually. You watch the AI iterate, fail, correct itself, and try over and over again, and you can't help but think "what a putz" (even as you secretly know it would have taken you at least as many tries). It is also cognitively effortful to write careful instructions that make the implicit explicit, and to keep track of a rapidly-changing garden of forking paths. And each of the small delays spent waiting for it to generate code takes you out of the state of flow. The remedy to this impatient ingratitude is to look at the resulting Git diff and imagine how much actual work would have been involved in making each one of those tiny little changes carefully by hand yourself.

Advanced techniques: for complex projects

The Planning Document Pattern - for complex, multi-step projects

See: Appendix C - Project Planning Guide

Ask the AI to create a project plan:

- With sections on Goals; Progress so far; and Next steps.

- Include lots of references to relevant files & functions

- Ask it to update the doc regularly during the conversation, marking what has been done.
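In practice I just ask the AI to write the plan, but a skeleton shows the shape I have in mind. The function below is a hypothetical sketch: the Markdown headings and checkbox style are one reasonable layout, not a required format, and the example file names are made up.

```python
def planning_doc(
    title: str,
    goals: list[str],
    done: list[str],
    next_steps: list[str],
    references: list[str],
) -> str:
    """Render a planning document with Goals, Progress so far, and Next steps."""
    lines = [f"# {title}", "", "## Goals", ""]
    lines += [f"- {goal}" for goal in goals]
    lines += ["", "## Progress so far", ""]
    lines += [f"- [x] {item}" for item in done]  # ticked boxes mark completed work
    lines += ["", "## Next steps", ""]
    lines += [f"- [ ] {item}" for item in next_steps]
    lines += ["", "## Relevant files & functions", ""]
    lines += [f"- {ref}" for ref in references]
    return "\n".join(lines) + "\n"
```

For example, planning_doc("Add delete button", ["Users can delete items"], ["API endpoint"], ["Smoke test"], ["app/views.py: delete_item()"]) produces a short document that a fresh Composer conversation can pick up as context.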

Runbooks/howtos

See: Appendix B - Example Runbook for Migrations

Create runbooks/howtos with best practices and tips for important, complex, or frequent processes, e.g. database migrations, deploys, etc.

- Then you can mention the relevant runbook whenever asking the AI to do that task. This makes for much quicker prompting, and better, more consistent outcomes.

- At the end of each such task, ask the AI to update the Runbook if anything new has been learned.

- Every so often, ask it to tidy the Runbook up.

💡 Pro Tip: Create dedicated runbooks (like MIGRATIONS.md) to codify common practices. This gives the AI a consistent reference point, making it easier to maintain institutional knowledge and ensure consistent approaches across your team.

The Side Project Advantage: Learning at Warp Speed

What might take months or years to learn in a production environment, you can discover in days or weeks with a side project. The key is using that freedom not just to build features faster, but to experiment with the relationship between human and AI developers.

Each "disaster" in a side project becomes a guardrail in your production workflow. It's like learning to drive - better to discover the importance of brakes in an empty parking lot than on a busy highway.

With lower stakes, you can attempt transformative changes that would be too risky in production, e.g. large-scale refactoring, or use new tools.

This creates a virtuous cycle: try something ambitious, watch what goes wrong, update your techniques and guardrails, push the envelope further, and repeat.

[SCREENSHOT: A git history showing the evolution of guardrails and complexity over time]

The Renaissance of Test-Driven Development

Why TDD Finally Makes Sense

"With AI, you get tests for free, then use them as guardrails for implementation."

Test-Driven Development is like teenage sex - everybody talks about it, but nobody's actually doing it. With AI-assisted programming, that will change. TDD gives you a double win:

- The AI does the legwork of writing the tests in the first place, removing that friction

- The tests then provide an objective success criterion for the Composer-agent AI to iterate against, so it can operate much more independently and effectively.

The key is to get aligned upfront with the AI on the criteria and edge cases to cover in the tests. This creates a virtuous cycle: the AI writes tests for free, then uses them as guardrails for implementation.

Avoiding Common Pitfalls

However, you need to be precise with your instructions. I learned this the hard way:

- Saying "make sure the tests pass" might lead the AI to delete some of the tests!

- Without access to the right context files, the AI might go rogue and start rebuilding your entire test fixture infrastructure

- The output from large test suites failing can quickly consume the AI's context window, making it progressively "dumber"

A Two-Stage Approach

I've found success with this pattern:

- First pass: Focus on getting the test cases right

- Second pass: "Without changing the tests too much, get the tests passing"

This prevents the AI from trying to solve test failures by rewriting the tests themselves.

Managing Test Execution

With large test suites, efficiency matters. Instead of repeatedly running all tests:

- Run the full suite once to identify failures

- Use focused runs (e.g., pytest -x --lf in Python) to tackle failing tests one by one

- Run the full suite again at the end
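The loop above can be sketched as a small wrapper around pytest. The -x (stop at first failure) and --lf (re-run last failures) flags are real pytest options. The wrapper functions and the choice to keep only the last 30 lines of output are my own assumptions, aimed at stopping a wall of failures from eating the AI's context window.

```python
import subprocess
import sys


def pytest_command(focused: bool) -> list[str]:
    """Full suite, or only the last-failed tests, stopping at the first failure."""
    base = [sys.executable, "-m", "pytest", "-q"]
    return (base + ["-x", "--lf"]) if focused else base


def tail(text: str, max_lines: int = 30) -> str:
    """Keep only the last few lines, noting how many were dropped."""
    lines = text.splitlines()
    if len(lines) <= max_lines:
        return "\n".join(lines)
    omitted = len(lines) - max_lines
    return "\n".join([f"... ({omitted} earlier lines omitted)"] + lines[-max_lines:])


def run_tests(focused: bool, max_lines: int = 30) -> tuple[bool, str]:
    """Run pytest and return (all passed?, trimmed output)."""
    result = subprocess.run(pytest_command(focused), capture_output=True, text=True)
    return result.returncode == 0, tail(result.stdout + result.stderr, max_lines)
```

A session would call run_tests(focused=False) once, then run_tests(focused=True) repeatedly while fixing, and finish with one more full run.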

Tweak your system prompt

See Appendix A: System Prompts and Configuration for an example of mine as it stands. Each line tells a story :~

Over time, I suspect these will become less important. Even now, the out-of-the-box behaviour is pretty good. But in the meantime, they help a little.

For Engineering Managers: Introducing AI to Teams

- Build confidence, e.g. start with commenting & documentation updates, then unit tests, graduate to small, isolated features, etc.

- If possible, run a hackathon on a low-risk side-project where only the AI is allowed to write code!

- If people aren't already using VS Code, then deputise someone to document VS Code best practices/migration guide (e.g. how to set up extensions, project settings, etc)

- Set up training sessions. If you want a hand, you can contact me on ai_training@gregdetre.com

The Unease of Not Knowing Your Own Code

"Managing AI is like managing a team - you can't review every line, so you focus on direction, criteria for success, and making mistakes safe."

Perhaps the most interesting insight comes from watching your relationship with the code evolve. There's an initial unease when you realize you no longer know exactly how every part works - the tests, the implementation, the infrastructure. The AI has modified nearly every line, and while you understand the structure, the details have become a partnership between you and the AI.

I relaxed when I realised that I recognised this feeling from a long time ago. As I started leading larger teams, I had this same feeling of unease when I couldn't review every line of code the team wrote, or even understand in detail how everything worked. As an engineering leader, I learned to:

- Provide context, and align on direction, goals, and overall architecture/approach

- Trust but verify - focus on how success will be evaluated, e.g. testing, metrics, and edge cases

- Let go of complete control

- Create psychological safety - encourage everyone to ask questions and raise concerns. And set things up so that mistakes can be caught early and easily reversed

- You only need to really sweat about the irreversible, consequential decisions

- Hire great people - or in this case, use the very best AIs & tools available

There are differences, but most of these lessons apply equally to managing teams of people and AIs.

Conversation Patterns with Composer

Here are the main patterns of Composer conversation that I've found useful:

Pattern 1: Propose, Refine, Execute

This is the most common pattern for implementing specific features or changes:

- Initial Request:

- Present a concrete task, problem, or goal

- Provide relevant context, e.g. previous experience

- Ask for a proposal (or multiple proposals, with trade-offs)

- Define success criteria

- Tell it to hold off on actual changes for now

- Review and Refine:

- AI responds with proposal and questions

- Discuss edge cases

- Refine the approach

- Clarify any ambiguities

- Implementation:

- Give go-ahead for changes

- AI implements and iterates

- Continue until success criteria are met

- Occasionally intervene if you see it going off-track

Often it helps to separate out the test-writing as a preliminary conversation, with a lot of discussion around edge-cases etc. Then the follow-up conversation about actually building the feature becomes pretty straightforward - "write the code to make these tests pass!"

Pattern 2: Architectural Discussion

When you need to think through design decisions:

- Present high-level goals

- Explore multiple approaches

- Discuss trade-offs

- No code changes, just planning

- Document decisions for future reference

Pattern 3: Large-Scale Changes

For complex, multi-stage projects:

- Create a living planning document containing:

- Goals and background

- Current progress

- Next steps and TODOs

- Update the document across conversations

- Use it as a reference point for context

- Track progress and adjust course as needed

See Appendix C: Project Planning Guide.

Conclusion: Welcome to the Age of the Centaur for programming

After hundreds of hours of AI-assisted development, the raw productivity gains are undeniable to me. For smaller, lower-stakes projects I see a boost of 2-5x. Even in larger, more constrained environments, I believe that 2x is achievable. And these multipliers are only growing.

We're entering the age of the centaur - where human+AI hybrid teams are greater than the sum of their parts. Just as the mythical centaur combined the strength of a horse with the wisdom of a human, AI-assisted development pairs machine capabilities with human judgment.

This hybrid approach has made programming more joyful for me than it's been in years. When the gap between imagination and implementation shrinks by 5x, you become more willing to experiment, to try wild ideas, to push boundaries. You spend less time wrestling with minutiae and more time dreaming about what should be, and what could be. It feels like flying.

The short- and medium-term future belongs to developers who can:

- Recognize and leverage the complementary strengths of human and machine

- Focus on architecture and intention while delegating implementation

- Make implicit context and goals explicit, and define success in clear, objective ways

- Define strong guardrails so that AI changes are reversible

- Embrace a new kind of flow - less about typing speed, more about clear thinking

In AI-assisted development, your most productive moments might come while making a cup of tea - as your AI partner handles the implementation details, freeing you to focus on what truly matters: ensuring that what's being built is worth building.

[SCREENSHOT: A before/after comparison of a complex feature implementation, showing not just the time difference but the scope of what's possible]

Postscript - AI-assisted writing

Postscript: I wrote this article with Cursor as an experiment - watch this space for more details on that AI-assisted writing process. While AI helped with the structure, exact phrasing, and expansion of ideas, the core insights and experiences are drawn from hundreds of hours of real-world usage.

Resources and Links

- Claude Sonnet 3.5 (upgraded version, 2024-Oct) capabilities and documentation

- Cursor IDE

- Useful tips for older versions of Cursor

- Useful tips for Composer - Video demonstrating Composer-agent mode in action (but pre-dating YOLO mode)

Dear reader, Have you found other helpful resources for AI-assisted development? I'd love to hear about them! Please share your suggestions for additional links that could benefit other developers getting started with these tools.

Appendices

Appendix A: System Prompts and Configuration

Click the cog icon in the top-right of the Cursor window to open the Cursor-specific settings. Paste into General / "Rules for AI".

You can also set up .cursorrules per-project.

Here are the Rules from one of the Cursor co-founders. Interestingly:

- I don't 100% agree with some of his requests. For example, I don't always want to be treated as an expert - sometimes I'm not! And I worry that asking it to give the answer immediately may lead to worse answers (because it hasn't been able to think things through).

- And many of his requests seem to be in response to problems I've never encountered. For example, I don't find that Claude gives me lectures, burbles about its knowledge cutoff, over-discloses that it's an AI, etc.

This is mine:

Core Development Guidelines

- Keep changes focused to a few areas at a time

- Don't make sweeping changes unrelated to the task

- Don't delete code or tests without asking first

- Don't remove comments or commented-out code unless explicitly asked

- Don't commit to Git without asking

- Never run major destructive operations (e.g. dropping tables) without asking first

Code Style and Testing

- In Python, use lower-case types for type-hinting, e.g. list instead of List

- Run tests often, especially after completing work or adding tests

- When running lots of tests, consider using Pytest's -x and --lf flags

- Make one change at a time for complex tasks, verify it works before proceeding

Communication

- If you notice a problem or see a better way, discuss before proceeding

- If getting stuck in a rabbithole, stop, review, and discuss

- Ask if you have questions

- Suggest a Git commit message when finishing discrete work or needing input

Appendix B: Example Runbook for Migrations

Database Migrations Guide

This guide documents our best practices and lessons learned for managing database migrations. For basic migration commands, see scripts/README.md.

Core Principles

- Safety First

- Never drop tables unless explicitly requested

- Never run migrations on production unless explicitly requested

- Always wrap migrations in

database.atomic()transactions - Try to write rollback functions, or if that's going to be very complicated then ask the user

- Check with the user that they've backed up the database first - see backup_proxy_production_db.sh

- Test-Driven Development

- Write

test_migrations.pytests first - Test migrations locally before deploying to production

- See

test_lemma_completeness_migration()intest_migrations.pyfor an example

- Write

- PostgreSQL Features

- Use PostgreSQL-specific features when they provide clear benefits

- For complex operations, use database.execute_sql() with raw PostgreSQL syntax

- Take advantage of PostgreSQL's JSONB fields, array types, and other advanced features
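The transaction-wrapping principle above can be sketched without any dependencies using Python's built-in sqlite3 module. This is an illustration only: the atomic helper below is a hypothetical stand-in for Peewee's database.atomic(), and the phrase table and language column are made-up names. It shows that when a migration fails partway through, the schema change is rolled back rather than left half-applied.

```python
import sqlite3
from contextlib import contextmanager


@contextmanager
def atomic(conn: sqlite3.Connection):
    """Hypothetical stand-in for Peewee's database.atomic()."""
    conn.execute("BEGIN")
    try:
        yield conn
    except Exception:
        conn.execute("ROLLBACK")  # undo every statement since BEGIN
        raise
    else:
        conn.execute("COMMIT")


def add_language_column(conn: sqlite3.Connection, fail: bool = False) -> None:
    """Example migration: add a column, optionally failing midway."""
    with atomic(conn):
        conn.execute("ALTER TABLE phrase ADD COLUMN language TEXT DEFAULT 'el'")
        if fail:
            raise RuntimeError("simulated failure mid-migration")


def column_names(conn: sqlite3.Connection, table: str) -> list[str]:
    """Return the column names of a table, in definition order."""
    return [row[1] for row in conn.execute(f"PRAGMA table_info({table})")]


# isolation_level=None means sqlite3 leaves BEGIN/COMMIT/ROLLBACK to us
conn = sqlite3.connect(":memory:", isolation_level=None)
conn.execute("CREATE TABLE phrase (id INTEGER PRIMARY KEY, text TEXT)")

try:
    add_language_column(conn, fail=True)
except RuntimeError:
    pass
columns_after_failure = column_names(conn, "phrase")

add_language_column(conn)
columns_after_success = column_names(conn, "phrase")
```

After the failed run the table still has only its original columns; the new column appears only once the migration completes cleanly.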

Common Patterns

Adding Required Columns

Three-step process to avoid nulls (see migrations/004_fix_sourcedir_language.py):

1. Add column as nullable with a default value

migrator.add_columns(
    Model,
    new_field=CharField(max_length=2, default="el"),
)

2. Fill existing rows

database.execute_sql("UPDATE table SET new_field = 'value'")

3. Make it required and remove default

migrator.sql("ALTER TABLE table ALTER COLUMN new_field SET NOT NULL")
migrator.sql("ALTER TABLE table ALTER COLUMN new_field DROP DEFAULT")
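The execution order matters here: the column is added as nullable first, existing rows are filled next, and the NOT NULL constraint comes last. As a rough sketch, the same sequence can be written as raw PostgreSQL statements. The helper required_column_steps and the table and column names are hypothetical, chosen only for illustration.

```python
def required_column_steps(
    table: str, column: str, sql_type: str, fill_value: str
) -> list[str]:
    """Return PostgreSQL statements, in execution order, for adding a required column.

    Hypothetical helper for illustration. Values are interpolated directly
    into the SQL, so only pass trusted, hard-coded arguments.
    """
    return [
        # 1. Add the column as nullable, with a default value
        f"ALTER TABLE {table} ADD COLUMN {column} {sql_type} DEFAULT '{fill_value}'",
        # 2. Fill any existing rows that are still NULL (in PostgreSQL the
        #    DEFAULT already backfills existing rows, so this is a safety net)
        f"UPDATE {table} SET {column} = '{fill_value}' WHERE {column} IS NULL",
        # 3. Make it required, then remove the default
        f"ALTER TABLE {table} ALTER COLUMN {column} SET NOT NULL",
        f"ALTER TABLE {table} ALTER COLUMN {column} DROP DEFAULT",
    ]


steps = required_column_steps("sourcedir", "language_code", "VARCHAR(2)", "el")
for statement in steps:
    print(statement)
```

Running SET NOT NULL before the rows are filled would fail on any row that still holds a NULL, which is why it comes last.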

Managing Indexes

Create new index:

migrator.sql(
    'CREATE UNIQUE INDEX new_index_name ON table (column1, column2);'
)

Drop existing index if needed:

migrator.sql('DROP INDEX IF EXISTS "index_name";')

Model Definitions in Migrations

When using add_columns or drop_columns, define model classes in both migrate and rollback functions:

class BaseModel(Model):
    created_at = DateTimeField()
    updated_at = DateTimeField()

class MyModel(BaseModel):
    field = CharField()

    class Meta:
        table_name = "my_table"

# Then use the model class, not string name:
migrator.drop_columns(MyModel, ["field"])

Note: No need to bind models to database - they're just used for schema definition.

Best Practices

- Model Updates

- Always update db_models.py to match migration changes

- Keep model and migration in sync

- Add appropriate type hints and docstrings

- Make a proposal for which indexes (informed by how we're querying that model) we'll need, but check with the user first

- Wherever possible, use the existing Peewee-migrate tooling

- Naming and Organization

- Use descriptive migration names

- Prefix with sequential numbers (e.g., 001_initial_schema.py)

- One logical change per migration

- Error Handling and Safety

- Use database.atomic() for transactions

- Handle database-specific errors

- Provide clear error messages

- Documentation

- Document complex migrations

- Note any manual steps required

- Update this guide with new learnings

Superseding Migrations

If a migration needs to be replaced:

- Keep the old migration file but make it a no-op

- Document why it was superseded

- Reference the new migration that replaces it

See migrations/002_add_sourcedir_language.py for an example.
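A superseded migration might look something like the sketch below. It assumes the peewee-migrate convention of module-level migrate and rollback functions; the file names in the docstring are hypothetical placeholders, not the project's actual files.

```python
"""Superseded migration, kept as a no-op so the migration history stays intact.

Hypothetical example: imagine this file is 00X_old_change.py and its work is
now done by 00Y_new_change.py. Explain here why the original was replaced.
"""


def migrate(migrator, database, fake=False, **kwargs):
    """Intentionally does nothing; see the replacement migration."""
    pass


def rollback(migrator, database, fake=False, **kwargs):
    """Nothing to undo, because migrate() made no changes."""
    pass
```

Keeping the file in place means databases that already recorded this migration and fresh databases both end up running the same sequence of migration names.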

Questions or Improvements?

- If you see problems or a better way, discuss before proceeding

- If you get stuck, stop and review, ask for help

- Update this guide if you discover new patterns or best practices

- Always ask if you have questions!

Appendix C: Project Planning Guide

Structure of document

Include 3 sections:

- Goals, problem statement, background

- Progress so far

- Future steps

Goals, problem statement, background

- Clear problem/goal at top, with enough context/description to pick up where we left off

- Example: "Migrate phrases from JSON to relational DB to enable better searching and management"

Progress so far

- What are we working on at the moment (in case we get interrupted and need to pick things back up later)

- Keep updated after each change

Future steps

- Most immediate or important tasks first

- Label the beginning of each action section with TODO, DONE, SKIP, etc

- Include subtasks with clear acceptance criteria

- Refer to specific files/functions so it's clear exactly what needs to be done

Key Tactics Used

- Vertical Slicing

- Implement features end-to-end (DB → API → UI)

- Complete one slice before starting next

- Example: Phrase feature implemented DB model → migration → views → tests

- Test Coverage

- Write tests before implementation

- Test at multiple levels (unit, integration, UI)

- Run tests after each change

- Add edge case tests

- Every so often run all the tests, but focus on running & fixing a small number of tests at a time for speed of iteration

- Progressive Enhancement

- Start with core functionality, the simplest version

- Add features/complexity incrementally, checking with me first

- Example sequence:

- Basic DB models

- Migration scripts

- UI integration

- Polish and edge cases

- Completed Tasks History

- Keep list of completed work (labelled DONE)

- Clear Next Steps

- Order the tasks in the order you want to do them, so the next task is always the topmost task that hasn't been done

- Break large tasks into smaller pieces
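To make the labelling convention concrete, here is a small sketch of a planning document skeleton and a helper that finds the next task. The plan text is a made-up example (only the goal sentence comes from the guide above), and next_task is a hypothetical helper, not part of any tool.

```python
from typing import Optional

# Hypothetical planning document following the three-section structure
PLAN = """\
Goals, problem statement, background
Migrate phrases from JSON to relational DB to enable better searching and management

Progress so far
Currently writing the migration script

Future steps
DONE Basic DB models
TODO Migration scripts
TODO UI integration
SKIP Export to CSV
"""


def next_task(plan: str) -> Optional[str]:
    """Return the topmost action still labelled TODO, or None if there is none."""
    for line in plan.splitlines():
        label, _, description = line.strip().partition(" ")
        if label == "TODO":
            return description
    return None


upcoming = next_task(PLAN)
```

Because tasks are kept in the order they should be done, the first TODO from the top is always the one to pick up next, whether a human or the AI is reading the document.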